Web scraping is a hot topic these days, especially as more businesses and individuals look to harness the vast amounts of data available online. Ever wondered how companies gather all that useful data from the web? Well, you’re in the right place. In this guide, we’ll explore everything you need to know about web scraping, from what it is to how it works, the tools you can use, and the legal and ethical considerations involved.

What is Web Scraping?

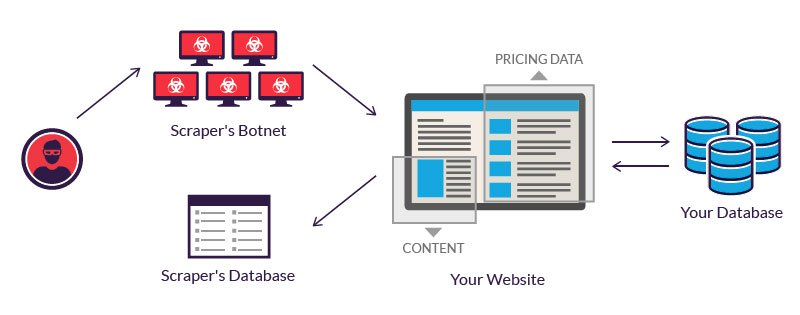

Web scraping, at its core, is the process of automatically extracting information from websites. It involves fetching the HTML of a webpage and then extracting data from it. This data can be anything from text, images, to structured data stored in tables. Web scraping is essential in today’s data-driven world, allowing businesses to gather large volumes of information quickly and efficiently.

How Does Web Scraping Work?

Step-by-Step Process

- Access the Website: The web scraper sends an HTTP request to the website’s server to access the web pages.

- Retrieve HTML Content: The server responds by sending the HTML content of the page.

- Parse the HTML: The scraper parses the HTML content to identify and extract the required data.

- Store the Data: Finally, the extracted data is stored in a structured format like CSV, JSON, or directly in a database.

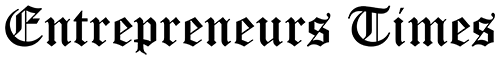

Tools and Technologies Used

Various tools and technologies facilitate web scraping, each with its unique features and capabilities. Popular tools include:

- Beautiful Soup: A Python tool used for extracting and navigating data from HTML and XML files.

- Scrapy: An open-source web-crawling framework for Python.

- Octoparse: A powerful web scraping tool with a visual interface.

- Selenium: A software suite that automates web browsers, commonly employed to scrape content that is dynamically generated.

Different Methods of Web Scraping

Manual Scraping

Manual scraping involves copying and pasting data from websites. While straightforward, it’s time-consuming and impractical for large-scale data extraction.

Automated Tools

Automated tools are software solutions designed to scrape data efficiently. They can handle large volumes of data and are much faster than manual methods.

APIs for Data Extraction

Some websites provide APIs (Application Programming Interfaces) that allow users to access data in a structured format. APIs are reliable and efficient but require proper authorization and adherence to usage limits.

Popular Web Scraping Tools

Beautiful Soup

Beautiful Soup is a Python library that makes it easy to scrape information from web pages. It creates parse trees from page source codes that can be used to extract data easily.

Scrapy

Scrapy is an open-source framework that provides a complete set of tools for web scraping. It’s highly efficient and customizable, suitable for complex scraping tasks.

Octoparse

Octoparse is a user-friendly web scraping tool that requires no coding. Its visual interface allows users to point and click to extract data from websites easily.

Selenium

Selenium is primarily used for testing web applications but is also popular for scraping dynamic content that loads with JavaScript.

Advantages of Web Scraping

Data Collection at Scale

Web scraping allows for the collection of massive amounts of data from multiple sources quickly, providing a competitive edge in data-driven decision-making.

Efficiency and Speed

Automated tools can scrape data much faster than humans, significantly reducing the time and effort required for data collection.

Competitive Advantage

Businesses can gain valuable insights into market trends, competitor pricing, and consumer behavior through web scraping, giving them a competitive advantage.

Challenges and Limitations

Legal and Ethical Concerns

Web scraping often faces legal challenges, especially when it involves scraping data from websites without permission. It’s crucial to understand the legal implications before starting any scraping project.

Technical Barriers

Scraping complex websites with dynamic content can be technically challenging and may require advanced knowledge of web technologies and programming.

Data Quality Issues

Ensuring the accuracy and reliability of scraped data is vital. Poor-quality data can lead to incorrect insights and decisions.

Legal Aspects of Web Scraping

Understanding Terms of Service

Most websites have terms of service that outline how their data can be used. Scraping data without adhering to these terms can result in legal consequences.

Intellectual Property Concerns

Scraping data that is copyrighted or owned by others can lead to intellectual property disputes. It’s important to respect the ownership of content.

Cases and Precedents

Various legal cases have shaped the landscape of web scraping. Familiarity with these cases can help in understanding the risks and legal boundaries.

Ethical Considerations in Web Scraping

Respecting Privacy

Web scraping should always respect user privacy. Avoid scraping personal data unless it is publicly available and permissible.

Avoiding Harm

Ensure that the scraping process does not harm the target website, such as by overloading its servers with too many requests.

Ethical Guidelines

Following ethical guidelines, like those provided by data privacy organizations, can help ensure responsible web scraping practices.

Common Applications of Web Scraping

Market Research

Companies use web scraping to gather data on market trends, customer preferences, and competitor activities to inform their strategies.

Price Comparison

Web scraping enables the collection of pricing data from various e-commerce sites, helping consumers find the best deals and businesses to adjust their pricing strategies.

Content Aggregation

Content aggregation websites use web scraping to collect and display information from multiple sources, providing a comprehensive view of specific topics.

Best Practices for Effective Web Scraping

Respect Website Policies

Always check and comply with the website’s terms of service to avoid legal issues.

Avoiding Detection

To avoid being blocked by websites, use techniques like rotating IP addresses and setting appropriate request intervals.

Data Cleaning and Processing

Clean and process the scraped data to ensure it is accurate, complete, and ready for analysis.

Future Trends in Web Scraping

AI and Machine Learning Integration

AI and machine learning are transforming web scraping by enabling more sophisticated data extraction and analysis capabilities.

Increased Automation

The future of web scraping involves greater automation, reducing the need for human intervention and increasing efficiency.

Enhanced Data Privacy Measures

As data privacy concerns grow, new measures and technologies are being developed to ensure that web scraping complies with privacy regulations.

Case Studies

Successful Use Cases

Various companies have successfully used web scraping to gain valuable insights and drive their business strategies. For example, a retailer might use scraped data to track competitor prices and adjust its pricing in real time.

Lessons Learned

Learning from the challenges and successes of others can help improve your web scraping practices and avoid common pitfalls.

Conclusion

Web scraping is a powerful tool that can unlock valuable insights from the vast amount of data available online. While it offers numerous benefits, it also comes with challenges and ethical considerations. By understanding the legal landscape and following best practices, you can effectively and responsibly leverage web scraping to enhance your business strategies.